Optimization induced equilibrium networks: An explicit optimization perspective for understanding equilibrium models

Jun 10, 2022

Xingyu Xie

Qiuhao Wang

Zenan Ling

Xia Li

Guangcan Liu

Zhouchen Lin

Abstract

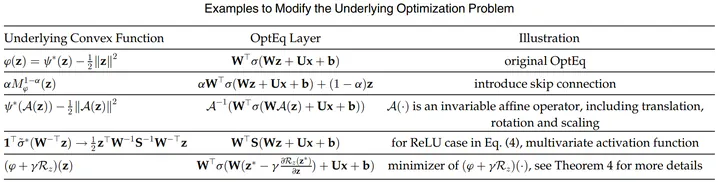

Optimization may offer a good perspective for revealing the mystery behind deep neural networks (DNNs). There are already clues pointing to a strong connection between DNNs and optimization problems; e.g., under a mild condition, a DNN's activation function is a proximal operator. In this paper, we provide a unified, optimization-induced interpretation of a special class of networks: equilibrium models, i.e., neural networks defined by fixed-point equations, which have recently attracted increasing attention. To this end, we first decompose DNNs into a new class of unit layer that is the proximal operator of an implicit convex function while keeping its output unchanged. We then derive the equilibrium model of this unit layer, which we name Optimization Induced Equilibrium Networks (OptEq). The equilibrium point of OptEq can be theoretically connected to the solution of a convex …
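The two ingredients the abstract relies on, an activation that is a proximal operator and a layer defined by a fixed-point equation, can be illustrated with a small sketch. This is not the OptEq construction from the paper. It assumes a generic single-layer equilibrium model z = ReLU(Wz + Ux + b) with hypothetical weights `W`, `U`, `b` and input `x`, and solves it by plain fixed-point iteration. ReLU is used because it is the proximal operator of the indicator function of the nonnegative orthant, i.e. prox(v) = argmin over z >= 0 of ½(z − v)², which equals max(0, v). The iteration converges here because ReLU is 1-Lipschitz and the maximum absolute row sum of the assumed `W` is below 1, which makes the map a contraction in the max-norm.

```python
import math


def relu(v):
    # Elementwise ReLU; equals the Euclidean projection onto {z >= 0},
    # i.e. the proximal operator of that set's indicator function.
    return [max(0.0, x) for x in v]


def prox_nonneg_numeric(v, lo=-5.0, hi=5.0, n=20001):
    # Brute-force prox of the indicator of [0, inf): minimize 0.5*(z - v)^2
    # over grid points z >= 0 (the indicator is +inf for z < 0).
    best_z, best_val = None, math.inf
    for i in range(n):
        z = lo + (hi - lo) * i / (n - 1)
        if z < 0:
            continue
        val = 0.5 * (z - v) ** 2
        if val < best_val:
            best_z, best_val = z, val
    return best_z


def matvec(M, v):
    # Dense matrix-vector product on nested lists.
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def equilibrium_layer(W, U, b, x, tol=1e-10, max_iter=1000):
    # Solve z = relu(W z + U x + b) by fixed-point iteration from z = 0.
    z = [0.0] * len(W)
    inj = [ux + bi for ux, bi in zip(matvec(U, x), b)]  # input injection U x + b
    for _ in range(max_iter):
        z_new = relu([wz + c for wz, c in zip(matvec(W, z), inj)])
        if max(abs(a - c) for a, c in zip(z_new, z)) < tol:
            return z_new
        z = z_new
    raise RuntimeError("fixed-point iteration did not converge")


# Hypothetical example weights; max absolute row sum of W is 0.5 < 1.
W = [[0.2, -0.3], [0.1, 0.4]]
U = [[1.0, 0.0], [0.0, 1.0]]
b = [0.0, -0.5]
x = [1.0, 0.2]

z = equilibrium_layer(W, U, b, x)
residual = max(abs(a - c) for a, c in zip(
    z, relu([wz + c for wz, c in zip(matvec(W, z), [1.0, -0.3])])))
print(z, residual)
print(prox_nonneg_numeric(2.7), prox_nonneg_numeric(-1.3))
```

For these weights the fixed point can be checked by hand: with z₂ = 0, z₁ = 1/(1 − 0.2) = 1.25, and the pre-activation of z₂ is 0.125 − 0.3 < 0, so ReLU keeps it at 0. The brute-force prox agrees with `relu` up to the grid spacing, which is the sense in which the activation "is" a proximal operator.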

Type

Publication

Transactions on Pattern Analysis and Machine Intelligence